POST https://api.playment.io/v0/projects/<project_id>/jobs

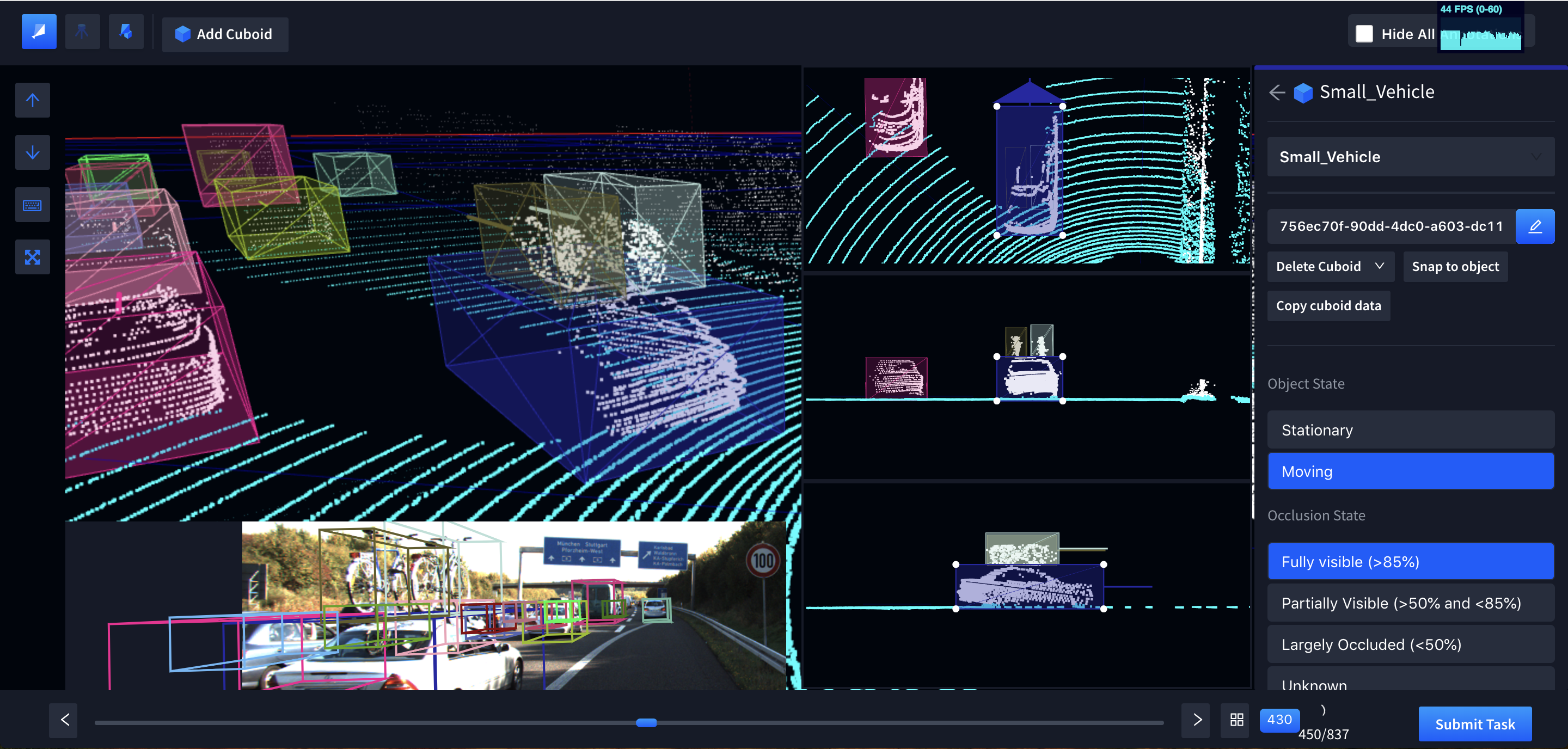

Broadly, there are three kinds of annotation when 3D point cloud data is present:

- 3D bounding box/Cuboid

- 3D bounding box linked with a corresponding 2D bounding box in the image

- Point cloud segmentation with or without instances

When multiple sensors are used to capture a scene, for example lidar sensors together with camera sensors, sensor-fusion annotation comes into the picture.

Parameters

project_id: To be passed in the URL. See Getting project_id.

x-api-key: Secret key to be passed as a header. See Getting x-api-key.

{

"reference_id": "001",

"data": {

"sensor_data": {

"frames": [

{

"sensors": [

{

"sensor_id": "lidar",

"data_url": "",

"sensor_pose": {

"position": {

"x": 0,

"y": 0,

"z": 0

},

"heading": {

"w": 1,

"x": 0,

"y": 0,

"z": 0

}

}

},

{

"sensor_id": "18158562",

"data_url": "",

"sensor_pose": {

"position": {

"x": -0.8141599005737696,

"y": 1.6495307329711615,

"z": -1.5230365881437538

},

"heading": {

"w": 0.6867388282287469,

"x": 0.667745267519749,

"y": -0.21162707775631337,

"z": 0.19421642430111224

}

}

}

],

"ego_pose": {},

"frame_id": "0001"

},

{

"sensors": [

{

"sensor_id": "lidar",

"data_url": "",

"sensor_pose": {

"position": {

"x": 0,

"y": 0,

"z": 0

},

"heading": {

"w": 1,

"x": 0,

"y": 0,

"z": 0

}

}

},

{

"sensor_id": "18158562",

"data_url": "",

"sensor_pose": {

"position": {

"x": -0.8141599005737696,

"y": 1.6495307329711615,

"z": -1.5230365881437538

},

"heading": {

"w": 0.6867388282287469,

"x": 0.667745267519749,

"y": -0.21162707775631337,

"z": 0.19421642430111224

}

}

}

],

"ego_pose": {},

"frame_id": "0002"

}

],

"sensor_meta": [

{

"id": "lidar",

"name": "lidar",

"state": "editable",

"modality": "lidar",

"primary_view": true

},

{

"id": "18158562",

"name": "18158562",

"state": "editable",

"modality": "camera",

"primary_view": false,

"intrinsics": {

"cx": 0,

"cy": 0,

"fx": 0,

"fy": 0,

"k1": 0,

"k2": 0,

"k3": 0,

"k4": 0,

"p1": 0,

"p2": 0,

"skew": 0,

"scale_factor": 1

}

}

]

}

},

"tag": "track_3d_bounding_boxes"

}

import requests

import json

"""

Details for creating jobs:

project_id ->> ID of the project in which the job is to be created

x_api_key ->> secret API key used to create jobs

tag ->> operation tag; ask Playment for this value

batch_id ->> ID of the batch in which the job is to be created

"""

project_id = ''

x_api_key = ''

tag = ''

batch_id = ''

def Upload_jobs(DATA):
    """POST one job payload to the jobs endpoint and return the parsed JSON response."""
    base_url = f"https://api.playment.io/v0/projects/{project_id}/jobs"
    response = requests.post(base_url, headers={'x-api-key': x_api_key}, json=DATA)
    print(response.json())
    # 5xx: server-side failure; 4xx: the request itself was rejected
    if response.status_code >= 500:
        raise Exception("Something wrong at Playment's end")
    if 400 <= response.status_code < 500:
        raise Exception("Something wrong!!")
    return response.json()

def create_batch(batch_name, batch_description):
    """Create a batch in the project and return its batch_id."""
    headers = {'x-api-key': x_api_key}
    url = 'https://api.playment.io/v1/project/{}/batch'.format(project_id)
    data = {"project_id": project_id, "label": batch_name, "name": batch_name,
            "description": batch_description}
    response = requests.post(url=url, headers=headers, json=data)
    print(response.json())
    if response.status_code >= 500:
        raise Exception("Something wrong at Playment's end")
    if 400 <= response.status_code < 500:
        raise Exception("Something wrong!!")
    return response.json()['data']['batch_id']

"""

Defining Sensor: This will contain detail about sensor's attributes.

:param _id: This is the sensor's id.

:param name: Name of the sensor.

:param primary_view: Only one of the sensors can have primary_view set to true.

:param state(optional): If you want this sensor not to be annotated, provide state as non_editable. Default is editable.

:param modality: This is the type of sensor.

:param intrinsics: In case of a camera modality sensor we will need the sensor intrinsics.

This field should ideally become part of the sensor configuration, and not be sent as part of each Job.

"cx": principal point x value; default 0

"cy": principal point y value; default 0

"fx": focal length in x axis; default 0

"fy": focal length in y axis; default 0

"k1": 1st radial distortion coefficient; default 0

"k2": 2nd radial distortion coefficient; default 0

"k3": 3rd radial distortion coefficient; default 0

"k4": 4th radial distortion coefficient; default 0

"p1": 1st tangential distortion coefficient; default 0

"p2": 2nd tangential distortion coefficient; default 0

"skew": camera skew coefficient; default 0

"scale_factor": The factor by which the image has been downscaled (=2 if original image is twice as

large as the downscaled image)

"""

#Name the sensors, similarly you can define this for multiple cameras

lidar_sensor_id = 'lidar'

#Preparing Lidar Sensor

lidar_sensor = {"id": lidar_sensor_id, "name": "lidar", "primary_view": True, "modality": "lidar","state": "editable"}

#Preparing Camera Sensor for camera_1

camera_1_intrinsics = {

"cx": 0, "cy": 0, "fx": 0, "fy": 0,

"k1": 0, "k2": 0, "k3": 0, "k4": 0, "p1": 0, "p2": 0, "skew": 0, "scale_factor": 1}

camera_1 = {"id": "camera_1", "name": "camera_1", "primary_view": False, "modality": "camera",

"intrinsics": camera_1_intrinsics,"state": "editable"}

#Preparing Camera Sensor for camera_2

camera_2_intrinsics = {

"cx": 0, "cy": 0, "fx": 0, "fy": 0,

"k1": 0, "k2": 0, "k3": 0, "k4": 0, "p1": 0, "p2": 0, "skew": 0, "scale_factor": 1}

camera_2 = {"id": "camera_2", "name": "camera_2", "primary_view": False, "modality": "camera",

"intrinsics": camera_2_intrinsics,"state": "editable"}

#SENSOR META - it contains information about sensors and it is constant across all jobs of same sensor

sensor_meta = [lidar_sensor, camera_1, camera_2]

#Collect frames for every sensor.

lidar_frames = [

"https://example.com/pcd_url_1",

"https://example.com/pcd_url_2"

]

camera_1_frames = [

"https://example.com/image_url_1",

"https://example.com/image_url_2"

]

camera_2_frames = [

"https://example.com/image_url_3",

"https://example.com/image_url_4"

]

#Preparing job creation payload

sensor_data = {"frames" : [],"sensor_meta": sensor_meta}

# Placeholder rotation (identity quaternion); replace with each sensor's actual heading.
w, x, y, z = 1, 0, 0, 0

for i in range(len(lidar_frames)):
    # Collect ego_pose if the data is in the world frame of reference
    ego_pose = {
        "heading": {"w": 1, "x": 0, "y": 0, "z": 0},
        "position": {"x": 0, "y": 0, "z": 0}
    }
    frame_obj = {"ego_pose": ego_pose, "frame_id": str(i), "sensors": []}

    lidar_heading = {"w": w, "x": x, "y": y, "z": z}
    lidar_position = {"x": 0, "y": 0, "z": 0}
    lidar_sensor = {"data_url": lidar_frames[i], "sensor_id": lidar_sensor_id,
                    "sensor_pose": {"heading": lidar_heading, "position": lidar_position}}

    camera_1_heading = {"w": w, "x": x, "y": y, "z": z}
    camera_1_position = {"x": 0, "y": 0, "z": 0}
    camera_1_sensor = {"data_url": camera_1_frames[i], "sensor_id": 'camera_1',
                       "sensor_pose": {"heading": camera_1_heading, "position": camera_1_position}}

    camera_2_heading = {"w": w, "x": x, "y": y, "z": z}
    camera_2_position = {"x": 0, "y": 0, "z": 0}
    camera_2_sensor = {"data_url": camera_2_frames[i], "sensor_id": 'camera_2',
                       "sensor_pose": {"heading": camera_2_heading, "position": camera_2_position}}

    frame_obj['sensors'].append(lidar_sensor)
    frame_obj['sensors'].append(camera_1_sensor)
    frame_obj['sensors'].append(camera_2_sensor)
    sensor_data['frames'].append(frame_obj)

# One job carries the whole sequence; reference_id must be unique per job (placeholder value).
reference_id = "001"

job_payload = {"data": {"sensor_data": sensor_data}, "reference_id": str(reference_id)}

data = {"data": job_payload['data'], "reference_id": job_payload['reference_id'], 'tag': tag, "batch_id": batch_id}

def to_dict(obj):
    """Round-trip through JSON so that non-serializable objects become plain dicts or strings."""
    return json.loads(
        json.dumps(obj, default=lambda o: getattr(o, '__dict__', str(o)))
    )

print(json.dumps(to_dict(data)))

response = Upload_jobs(DATA=data)

# .PCD v0.7 - Point Cloud Data file format

VERSION 0.7

FIELDS x y z

SIZE 4 4 4

TYPE F F F

COUNT 1 1 1

WIDTH 47286

HEIGHT 1

VIEWPOINT 0 0 0 1 0 0 0

POINTS 47286

DATA ascii

5075.773 3756.887 107.923

5076.011 3756.876 107.865

5076.116 3756.826 107.844

5076.860 3756.975 107.648

5077.045 3756.954 107.605

5077.237 3756.937 107.559

5077.441 3756.924 107.511

5077.599 3756.902 107.474

5077.780 3756.885 107.432

5077.955 3756.862 107.391

...

{

"data": {

"job_id": "3f3e8675-ca69-46d7-aa34-96f90fcbb732",

"reference_id": "001",

"tag": "Sample-task"

},

"success": true

}

| Request Key | Description |
|---|---|
| reference_id | A unique identifier for a request. We'll fail a request if you've previously sent another request with the same reference_id. This helps us ensure that we don't charge you for work we've already done for you. |
| tag | Each request should have a tag which tells us what operation needs to be performed on that request. We'll share this tag with you during the integration process. |
| data | Object. The complete data that is to be annotated. |
| data.sensor_data | Object. Consists of the lists frames and sensor_meta. |
| data.sensor_data.sensor_meta | List. Contains all the sensors, each with metadata such as: id (String), the id of the sensor; name (String), the name of the sensor; modality (String), the modality/type of the sensor; intrinsics (Object), needed for a camera modality sensor. Check the sample and definitions below. |
| data.sensor_data.frames | List of frames, one per timestamp, in the order of annotation. Each frame has frame_id, ego_pose and sensors. |
| data.sensor_data.frames.[i].frame_id | String. Unique identifier of the particular frame. |
| data.sensor_data.frames.[i].ego_pose | Object. Contains the pose of a fixed point on the ego vehicle in the world frame of reference, as a position (x, y, z) and a heading (quaternion (w, x, y, z)). Check the sample pose object below. If the pose of the ego vehicle is available in the world frame of reference, the tool can allow annotators to mark objects as stationary and toggle APC (aggregated point cloud) mode. Usually, if a vehicle is equipped with an IMU or odometry sensor, it is possible to get the pose of the ego vehicle in the world frame of reference. |
| data.sensor_data.frames.[i].sensors | List of all the sensors associated with this particular frame, each having: sensor_id (String), the id of the sensor, which is a foreign key to the sensor id mentioned in the sensor_meta of the sequence data; data_url (String), a link to the file containing the data captured from the sensor for this frame (to annotate lidar data, please share point clouds in ASCII-encoded PCD format; Ref: PCD format); sensor_pose (Object), the pose of the respective sensor in a common frame of reference. If the ego_pose is available in the world frame of reference, then you should specify the sensor_pose of individual sensors in the same world frame of reference. In such cases, the pose might change in every frame, as the vehicle moves. If the ego_pose is not available, then all sensor_pose values can be specified with respect to a fixed point on the vehicle. In such cases, the pose will not change between frames. |
| batch_id (optional) | String. A batch is a way to organize multiple sequences under one batch_id. |
| priority_weight (optional) | Number. Ranges from 1 to 10, with 1 being the lowest and 10 the highest. Default value: 5. |
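The constraints listed in the table can be checked on the client before a job is submitted. The following is a minimal sketch, not Playment's own validation: the function name `validate_job_payload` and the wording of the messages are hypothetical, and it only covers rules stated on this page (required keys, at most one primary_view sensor, intrinsics for camera sensors, sensor_id values that refer to sensor_meta, and the priority_weight range).

```python
def validate_job_payload(payload):
    """Return a list of problems found in a job payload (empty list if none)."""
    problems = []

    # Top-level keys described in the request table.
    for key in ("reference_id", "tag", "data"):
        if key not in payload:
            problems.append(f"missing key: {key}")

    sensor_data = payload.get("data", {}).get("sensor_data", {})
    sensor_meta = sensor_data.get("sensor_meta", [])
    meta_ids = {sensor.get("id") for sensor in sensor_meta}

    # Only one sensor may be the primary view.
    primary_count = sum(1 for sensor in sensor_meta if sensor.get("primary_view"))
    if primary_count > 1:
        problems.append(f"{primary_count} sensors have primary_view set")

    # Camera sensors need intrinsics.
    for sensor in sensor_meta:
        if sensor.get("modality") == "camera" and "intrinsics" not in sensor:
            problems.append(f"camera sensor {sensor.get('id')} has no intrinsics")

    # Every per-frame sensor_id must refer to an id in sensor_meta.
    seen_frame_ids = set()
    for frame in sensor_data.get("frames", []):
        frame_id = frame.get("frame_id")
        if frame_id in seen_frame_ids:
            problems.append(f"duplicate frame_id: {frame_id}")
        seen_frame_ids.add(frame_id)
        for sensor in frame.get("sensors", []):
            if sensor.get("sensor_id") not in meta_ids:
                problems.append(
                    f"frame {frame_id}: unknown sensor_id {sensor.get('sensor_id')}"
                )

    # priority_weight is optional but must be between 1 and 10 when present.
    weight = payload.get("priority_weight")
    if weight is not None and not 1 <= weight <= 10:
        problems.append(f"priority_weight out of range: {weight}")

    return problems
```

Calling `validate_job_payload(data)` just before the upload call and stopping when the returned list is non-empty avoids spending a reference_id on a request that is likely to be rejected.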

Secure attachment access

For Secure attachment (data_url here) access and IP whitelisting, refer to Secure attachment access

Note that when only lidar sensor data is available and there is no other sensor data available, the same payload structure can be used keeping only the lidar sensor in sensors and sensor_meta objects.

In order to annotate lidar data, please share point clouds in ASCII-encoded PCD format.

Ref: PCD format
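If your point clouds are held in memory as (x, y, z) tuples, an ASCII PCD file with the same header layout as the sample PCD file on this page can be produced with plain string formatting. This is a minimal sketch: the function name `points_to_ascii_pcd` is hypothetical, it assumes an unorganized cloud (HEIGHT 1) with only x, y, z fields, and the three-decimal precision simply mirrors the sample.

```python
def points_to_ascii_pcd(points):
    """Serialize an iterable of (x, y, z) floats into ASCII PCD v0.7 text."""
    points = list(points)
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",
        "TYPE F F F",
        "COUNT 1 1 1",
        f"WIDTH {len(points)}",      # unorganized cloud: width equals point count
        "HEIGHT 1",
        "VIEWPOINT 0 0 0 1 0 0 0",
        f"POINTS {len(points)}",
        "DATA ascii",
    ]
    rows = [f"{x:.3f} {y:.3f} {z:.3f}" for x, y, z in points]
    return "\n".join(header + rows) + "\n"


if __name__ == "__main__":
    print(points_to_ascii_pcd([(5075.773, 3756.887, 107.923)]), end="")
```

The returned text can be written to a `.pcd` file and hosted at the location you pass as data_url.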

{

"heading": {"rotation in quaternion format (w,x,y,z)"},

"position": {}

}

If the ego_pose is available in the world frame of reference, then you should specify both the sensor_pose of individual sensors and the point cloud in the same world frame of reference. In such cases, the pose might change in every frame, as the vehicle moves.

If the ego_pose is not available, then all sensor_pose can be specified with respect to a fixed point on the vehicle. In such cases, the pose will not change between frames.
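When the ego_pose is known in the world frame and each sensor's mounting pose is known relative to the vehicle, the per-frame sensor_pose in the world frame can be obtained by composing the two poses. The sketch below is illustrative only: the helper names are hypothetical, and it assumes unit quaternions in (w, x, y, z) order under the Hamilton convention, with each pose describing the transform from the child frame to its parent frame. Check these conventions against your own calibration data before relying on it.

```python
def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z) tuples."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate(q, v):
    """Rotate the 3-vector v by the unit quaternion q."""
    w, x, y, z = q
    conjugate = (w, -x, -y, -z)
    _, rx, ry, rz = quat_multiply(quat_multiply(q, (0.0, *v)), conjugate)
    return (rx, ry, rz)


def sensor_pose_in_world(ego_pose, sensor_in_vehicle):
    """Compose the ego pose (world frame) with a sensor pose (vehicle frame)."""
    ego_q = tuple(ego_pose["heading"][k] for k in "wxyz")
    ego_p = tuple(ego_pose["position"][k] for k in "xyz")
    sensor_q = tuple(sensor_in_vehicle["heading"][k] for k in "wxyz")
    sensor_p = tuple(sensor_in_vehicle["position"][k] for k in "xyz")

    # Rotations compose by multiplication; the mounting offset is rotated
    # into the world frame before being added to the ego position.
    world_q = quat_multiply(ego_q, sensor_q)
    offset = rotate(ego_q, sensor_p)
    world_p = tuple(e + o for e, o in zip(ego_p, offset))
    return {
        "heading": dict(zip("wxyz", world_q)),
        "position": dict(zip("xyz", world_p)),
    }
```

The returned dictionary has the same heading/position shape as the pose object shown above, so it can be used directly as a sensor_pose value.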

In case of a camera modality sensor, we will need the sensor intrinsics.

"intrinsics": {

"cx": 0,

"cy": 0,

"fx": 0,

"fy": 0,

"k1": 0,

"k2": 0,

"k3": 0,

"k4": 0,

"p1": 0,

"p2": 0,

"skew": 0,

"scale_factor": 1

}

- Definitions:

"cx": principal point x value

"cy": principal point y value

"fx": focal length in x axis

"fy": focal length in y axis

"k1": 1st radial distortion coefficient

"k2": 2nd radial distortion coefficient

"k3": 3rd radial distortion coefficient

"k4": 4th radial distortion coefficient

"p1": 1st tangential distortion coefficient

"p2": 2nd tangential distortion coefficient

"skew": camera skew coefficient

"scale_factor": The factor by which the image has been downscaled (=2 if original image is twice as large as the downscaled image)

Ref: Camera calibration and 3D reconstruction
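To see how these parameters interact, the sketch below projects a 3D point given in the camera frame to pixel coordinates using the pinhole model with radial and tangential distortion, as described in the OpenCV reference above. It is a simplified illustration, not the annotation tool's own projection: the function name `project_point` is hypothetical, k4 and scale_factor are deliberately left out because their exact handling is not specified on this page, and the camera is assumed to look along +z.

```python
def project_point(point, intrinsics):
    """Project a 3D point in the camera frame (z forward) to pixel coordinates.

    Returns (u, v), or None when the point lies behind the camera.
    """
    X, Y, Z = point
    if Z <= 0:
        return None

    # Normalized image coordinates.
    x, y = X / Z, Y / Z
    r2 = x * x + y * y

    k1 = intrinsics.get("k1", 0)
    k2 = intrinsics.get("k2", 0)
    k3 = intrinsics.get("k3", 0)
    p1 = intrinsics.get("p1", 0)
    p2 = intrinsics.get("p2", 0)

    # Radial (k1..k3) and tangential (p1, p2) distortion.
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y

    # Apply focal lengths, skew and principal point.
    u = intrinsics["fx"] * xd + intrinsics.get("skew", 0) * yd + intrinsics["cx"]
    v = intrinsics["fy"] * yd + intrinsics["cy"]
    return (u, v)


if __name__ == "__main__":
    example = {"fx": 100, "fy": 100, "cx": 50, "cy": 60}  # made-up values
    print(project_point((1.0, 2.0, 4.0), example))
```

Note that with all intrinsics left at 0, as in the sample payload, every point would project to the same pixel, so real calibration values are needed for the camera views to line up with the point cloud.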

Response Structure of job creation call

| Response Key | Description |
|---|---|
| data | Object. Contains job_id, reference_id and tag. |
| reference_id | String. The unique identifier sent with the request. |
| job_id | String. UUID; the unique job id. |
| tag | String. Tells us what operation needs to be performed on that request. |

job_id is the unique ID of a job. It is used to get the job result.
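Since the job_id is needed later, it is worth extracting and storing it as soon as the creation call returns. A minimal sketch, assuming the response body has the shape shown in the sample response on this page; the function name `extract_job_id` is hypothetical.

```python
def extract_job_id(response_body):
    """Return the job_id from a parsed job-creation response.

    Raises ValueError when the response does not report success.
    """
    if not response_body.get("success"):
        raise ValueError(f"job creation failed: {response_body}")
    return response_body["data"]["job_id"]


if __name__ == "__main__":
    sample = {
        "data": {
            "job_id": "3f3e8675-ca69-46d7-aa34-96f90fcbb732",
            "reference_id": "001",
            "tag": "Sample-task",
        },
        "success": True,
    }
    print(extract_job_id(sample))
```

Keeping a mapping from your own reference_id to the returned job_id makes it easier to match results back to the source sequences.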